But how do AI videos actually work? | Guest video by @WelchLabsVideo

Download information and video details for But how do AI videos actually work? | Guest video by @WelchLabsVideo

Author: 3Blue1Brown

Published: 25 July 2025

Views: 78.1K

Similar videos: But how do AI videos actually work

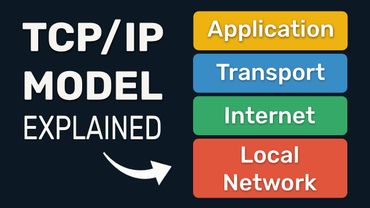

How the TCP/IP Model Actually Works | CCNA Day 3

Unlock Documentary Editing Secrets: How to Edit Like Magnates Media & Transform Your Videos

Rigid Body Physics for Beginners (Blender Tutorial)

Informatica Full Course | Informatica Tutorial For Beginners | What Is Informatica | Intellipaat

Fundamentals of Partnership Class 12 One Shot | Accounts Chapter 1 | Gaurav Jain