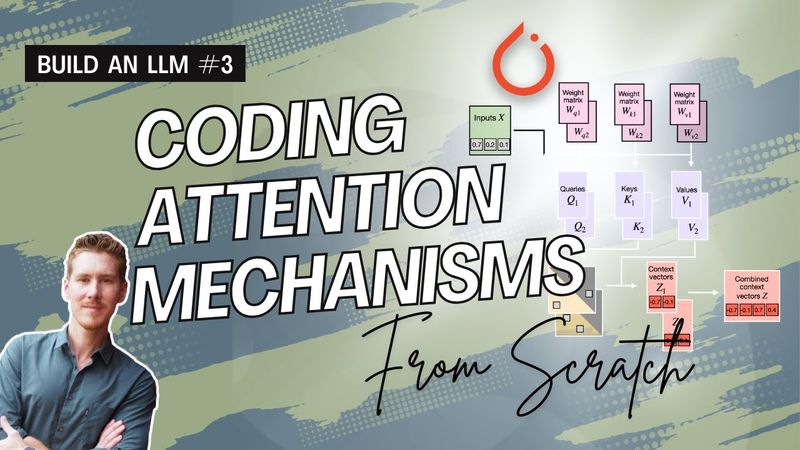

Build an LLM from Scratch 3: Coding attention mechanisms

Uploader: Sebastian Raschka

Published at: 3/11/2025

Views: 5.5K

Description:

Links to the book:
- (Amazon)
- (Manning)

Link to the GitHub repository:

This is a supplementary video explaining how attention mechanisms (self-attention, causal attention, multi-head attention) work by coding them from scratch.

00:00 3.3.1 A simple self-attention mechanism without trainable weights
41:01 3.3.2 Computing attention weights for all input tokens
52:40 3.4.1 Computing the attention weights step by step
1:12:33 3.4.2 Implementing a compact SelfAttention class
1:21:00 3.5.1 Applying a causal attention mask
1:32:33 3.5.2 Masking additional attention weights with dropout
1:38:05 3.5.3 Implementing a compact causal self-attention class
1:46:55 3.6.1 Stacking multiple single-head attention layers
1:58:55 3.6.2 Implementing multi-head attention with weight splits

You can find additional bonus materials on GitHub:
- Comparing Efficient Multi-Head Attention Implementations
- Understanding PyTorch Buffers
