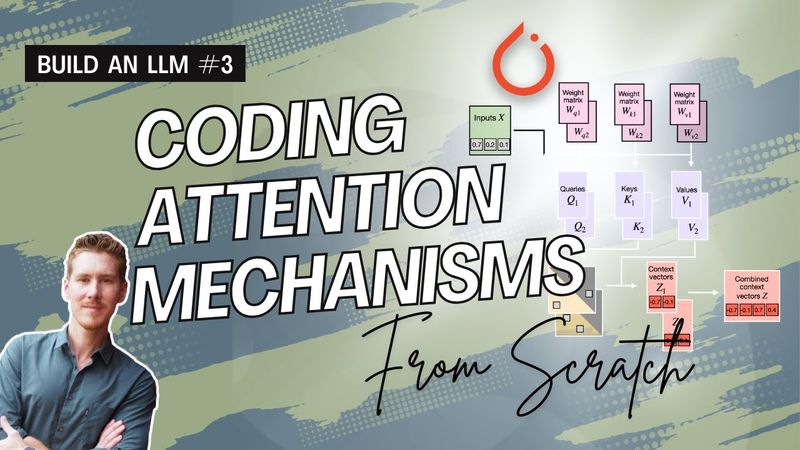

Build an LLM from Scratch 3: Coding attention mechanisms

Build an LLM from Scratch 3: Coding attention mechanisms 视频的下载信息和详情

作者:

Sebastian Raschka发布日期:

2025/3/11观看次数:

5.5K简介:

相似视频:Build an LLM from Scratch

A Toonsquid 2D Animation Tutorial

Atmospheric Effects in Premiere Pro: Beginner vs Pro Techniques

Визитка программиста

This NEW UNIQUE BELT enables POWERFUL TECH in 0.3! | Path of Exile 2: The Third Edict

Tutorial: Making a Mechanical Walking Creature in Blender